Measuring what matters: GenAI KPIs for banking success beyond accuracy

Dr. Mazhar Javed Awan (Senior Managing Consultant - Data Science)

October 09, 2025


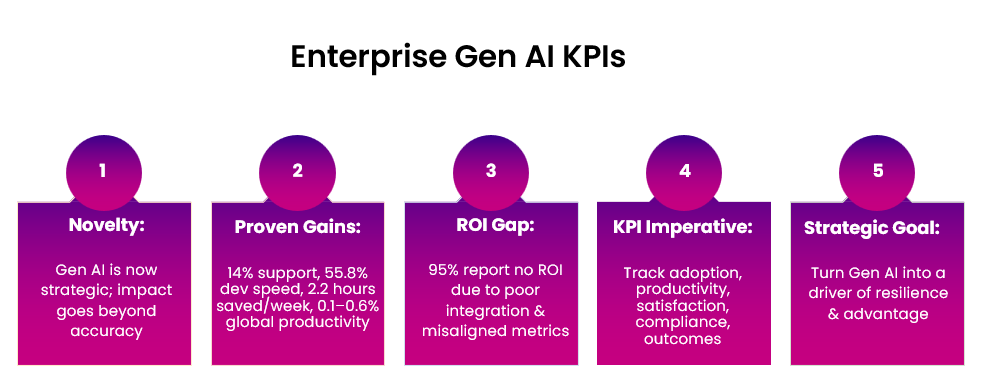

In recent years, Generative AI (GenAI) has moved well beyond the realm of novelty, emerging as a strategic imperative for global financial institutions. As banks and fintechs integrate GenAI into everyday operations, they face a pressing question: how do we measure success beyond model accuracy? Traditional performance metrics, like precision, recall, or F1 scores, only tell us whether a model is technically correct. But enterprise value lies in outcomes: operational, human, and financial.

Empirical research confirms GenAI’s transformative potential. A study of customer support teams found that access to AI assistance raised productivity by 14%, enabling agents to resolve more issues per hour, with especially significant gains for less-experienced staff [1] [2].

Similarly, controlled experiments using GitHub Copilot showed developers completing tasks 55.8% faster with AI assistance [3]. On a macro scale, workers using GenAI report saving an average of 5.4% of work hours per week, translating to about 2.2 hours saved weekly per user [4] [5]. Economists anticipate that GenAI could boost global labour productivity by 0.1 to 0.6 percentage points annually through 2040, with potentially larger gains when combined with other automation technologies [6] [7].

Yet many AI projects fall short of delivering tangible business returns. One widely cited study reports that 95% of organizations see no measurable ROI on their GenAI initiatives, often because pilots lack integration into workflows, use cases remain vague, and success metrics are misaligned [8].

Therefore, banking leaders must embrace a holistic KPI framework, one that measures adoption, productivity, satisfaction, compliance, and economic outcomes. Only then can GenAI transition from an experimental toolkit into a consistent driver of operational excellence, resilience, and competitive differentiation.

2. Why expand KPIs beyond model accuracy?

While traditional AI evaluation emphasizes technical metrics such as accuracy, precision, recall, and F1 scores, these measures alone fail to capture whether GenAI is delivering value to the enterprise. A growing body of research underscores the limitations of model-centric evaluation and emphasizes the importance of human-centric, productivity-driven, and compliance-oriented KPIs.

2.1. Model accuracy ≠ Business impact

- The "accuracy paradox" illustrates how highly accurate models can fail in practice when integrated into misaligned workflows or when user trust is lacking. AI often exposes inconsistencies within existing processes, which can make even accurate models appear less useful in real-world operations [9] [10].

2.2. Productivity gains over marginal accuracy

- Evidence shows that GenAI’s economic benefits stem more from enhancing productivity than from incremental accuracy improvements:

- In a landmark field experiment, customer support agents using GenAI assistants resolved 14% more issues per hour, with the largest gains (up to 35%) seen among less experienced agents [11] [12] [13].

- Separate research found software developers completed tasks 55.8% faster with AI pair-programming tools, without any compromise in correctness [14].

- The accuracy-focused mindset underestimates the value of time saved, worker enablement, and task acceleration: core benefits that expand enterprise value.

2.3. Adoption & Trust Drive ROI

- High-performing AI models often fail to yield ROI when end users don’t trust or adopt them. Research shows that interpretability alone doesn’t build trust; instead, outcome transparency (feedback on impact) is more effective at enhancing adoption [15].

- Tools that offer clear, intuitive outputs see higher adoption, a necessity if AI output is to benefit real-world operations.

2.4. Governance, Compliance & Ethical risks require their own KPIs

As GenAI becomes more pervasive, it's not enough to measure only performance. Robust KPIs must include:

- Hallucination frequency (incorrect AI-generated content).

- Bias and fairness metrics (e.g., demographic parity).

- Compliance violations (e.g., privacy breaches, misinformation).

Risk management frameworks such as the NIST AI Risk Management Framework advocate measuring trust and safety, not just technical accuracy [16] [17].
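As a minimal sketch of two of these governance KPIs, the Python below computes a hallucination rate from reviewer flags and a demographic parity difference, i.e. the largest gap in favorable-outcome rates between groups. The input formats (one boolean flag per reviewed response, `(group, outcome)` pairs) and the function names are illustrative assumptions; which responses are reviewed and which groups are compared would follow each bank's own policy.

```python
from collections import defaultdict
from typing import Iterable


def hallucination_rate(flags: Iterable[bool]) -> float:
    """Share of reviewed responses flagged as containing unsupported content."""
    flags = list(flags)
    return sum(flags) / len(flags) if flags else 0.0


def demographic_parity_difference(decisions: Iterable[tuple[str, int]]) -> float:
    """Largest gap in favorable-outcome rate between any two groups.

    Each decision is a (group, outcome) pair, where outcome is 1 for a
    favorable decision (e.g. loan approved) and 0 otherwise. A value of 0
    means every group receives favorable outcomes at the same rate.
    """
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        positives[group] += outcome
    if not totals:
        raise ValueError("no decisions supplied")
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


if __name__ == "__main__":
    print(hallucination_rate([False, True, False, False]))
    sample = [("A", 1), ("A", 1), ("A", 0), ("A", 1), ("B", 1), ("B", 0), ("B", 0), ("B", 1)]
    print(demographic_parity_difference(sample))
```

Demographic parity is only one fairness definition; a bank could swap in others (for example, comparing error rates across groups) without changing the overall reporting pattern.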

3. KPI categories for banking: Capturing GenAI's true value

Financial institutions must move past model-centric metrics and adopt a multi-dimensional KPI framework to fully realize the value of GenAI. Below is a refined, banking-focused breakdown of the core KPI categories, each supported by global insights and industry data.

(a) Model quality

- What to Measure: Traditional metrics (precision, recall, F1-score) are essential for tracking baseline performance.

- Beyond the Basics: LLM-based evaluation techniques (e.g., pairwise preference scoring) can add rigor to evaluation.

- Key Insight: Strong accuracy alone doesn’t guarantee real-world gains without adoption and workflow alignment.
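A minimal Python sketch of these model-quality metrics, assuming binary labels (1 = positive class) and a hypothetical judgment format in which each pairwise comparison between model A and model B is recorded as "A", "B", or "tie". The function names are illustrative, not the API of any particular evaluation library.

```python
from typing import Sequence


def precision_recall_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> tuple[float, float, float]:
    """Binary precision, recall and F1 with 1 as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def pairwise_win_rate(judgments: Sequence[str]) -> float:
    """Share of comparisons won by model A; a tie counts as half a win.

    Judgments could come from human raters or an LLM judge comparing two
    answers to the same prompt (hypothetical format: "A", "B" or "tie").
    """
    if not judgments:
        raise ValueError("no judgments supplied")
    score = sum(1.0 if j == "A" else 0.5 if j == "tie" else 0.0 for j in judgments)
    return score / len(judgments)


if __name__ == "__main__":
    p, r, f1 = precision_recall_f1([1, 1, 0, 1, 0], [1, 0, 0, 1, 1])
    print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
    print(pairwise_win_rate(["A", "B", "A", "tie"]))
```

Counting ties as half a win is one common convention; other schemes (dropping ties, or fitting a rating model over many comparisons) are equally valid choices.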

(b) Adoption & utilization

- What to Measure: % of staff using GenAI tools (e.g., RMs, compliance); production rollout rates.

- Why It Matters: Even accurate models fail if adoption is low. Bain reports ~20% average productivity gains in financial institutions where GenAI is actively used.

- Banking Insight: By mid-2024, 75% of banks were deploying “at least one” GenAI application, with 40% in production (source: World Wide Technology).
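To show how an adoption KPI such as "% of staff using GenAI tools" might be computed, here is a minimal sketch that counts eligible staff active within a trailing window. The 30-day window, the `last_used` mapping (a hypothetical extract from usage logs), and the function name are all assumptions for illustration.

```python
from datetime import date, timedelta


def active_adoption_rate(
    last_used: dict[str, date],
    eligible_staff: set[str],
    as_of: date,
    window_days: int = 30,
) -> float:
    """Share of eligible staff whose most recent use falls in the trailing window.

    last_used maps a user ID to the date that user last used the tool.
    Users outside eligible_staff (e.g. testers or contractors) are ignored.
    """
    if not eligible_staff:
        raise ValueError("eligible_staff is empty")
    cutoff = as_of - timedelta(days=window_days)
    active = {
        user
        for user, used_on in last_used.items()
        if user in eligible_staff and cutoff < used_on <= as_of
    }
    return len(active) / len(eligible_staff)


if __name__ == "__main__":
    logs = {
        "rm1": date(2025, 10, 1),
        "rm2": date(2025, 8, 1),   # lapsed user
        "rm3": date(2025, 10, 8),
        "contractor": date(2025, 10, 5),  # not in the eligible population
    }
    print(active_adoption_rate(logs, {"rm1", "rm2", "rm3", "rm4"}, date(2025, 10, 9)))
```

Measuring against the eligible population, rather than total licences issued, keeps the metric from overstating adoption.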

(c) Productivity & efficiency

- What to Measure: Time saved per task (e.g., KYC processing cycle time); tasks completed or issues resolved per hour.

- Global Data: GenAI users saved an average of 5.4% of weekly work hours, about 2.2 hours per week.

- Banking Example: Indian banks could boost operational efficiency by up to 46% via GenAI.

- EY Insight: GenAI in banking is enabling automation of sales activities (66%) and accelerating innovation (54%).
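The arithmetic behind "5.4% of weekly hours is about 2.2 hours" can be sketched as below, extended to estimate released capacity in full-time equivalents (FTE). The 40-hour week and the 1,000-user population are hypothetical assumptions, and the result represents capacity that can be redeployed, not a headcount reduction.

```python
def capacity_released(share_saved: float, weekly_hours: float, users: int) -> tuple[float, float]:
    """Convert a share of time saved into weekly hours per user and FTE equivalents.

    Assumes every user works `weekly_hours` and that the saved share applies
    uniformly across users (a simplifying assumption).
    """
    if weekly_hours <= 0:
        raise ValueError("weekly_hours must be positive")
    hours_per_user = share_saved * weekly_hours
    # Total hours saved across users, divided by one FTE's working week.
    fte_equivalent = hours_per_user * users / weekly_hours
    return hours_per_user, fte_equivalent


if __name__ == "__main__":
    hours, fte = capacity_released(0.054, 40.0, users=1_000)
    print(f"{hours:.2f} h/user/week, ~{fte:.0f} FTE of capacity")
```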

(d) Business impact & innovation

- What to Measure: Revenue uplift tied to GenAI (e.g., personalization cross-sell), cost savings, and innovation speed (time-to-market).

- Global Scale: McKinsey estimates GenAI could contribute $2.6–4.4 trillion annually, with banking among the top sectors.

- Accenture Insight: Early adopters report 20–30% productivity improvements, up to 6% revenue growth, and notably higher Return on Equity.
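For the business-impact category, a simple ROI and payback calculation can be sketched as follows. All figures in the example are hypothetical; in practice the hard part is attributing revenue uplift to GenAI (for example, through a holdout group), and this sketch assumes those inputs have already been measured.

```python
def roi(revenue_uplift: float, cost_savings: float, total_cost: float) -> float:
    """Return on investment as a fraction: (benefits - costs) / costs."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (revenue_uplift + cost_savings - total_cost) / total_cost


def payback_months(upfront_cost: float, monthly_net_benefit: float) -> float:
    """Months until cumulative net benefit covers the upfront cost."""
    if monthly_net_benefit <= 0:
        return float("inf")  # never pays back at the current run rate
    return upfront_cost / monthly_net_benefit


if __name__ == "__main__":
    # Hypothetical annual figures for a single GenAI use case.
    print(f"ROI: {roi(1_200_000, 800_000, 1_600_000):.0%}")
    print(f"Payback: {payback_months(1_600_000, 200_000):.1f} months")
```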

(e) Employee & customer satisfaction

- What to Measure: NPS post-GenAI interaction, internal satisfaction scores, perceived workload reduction.

- Global View: Employees using GenAI report lower cognitive load and higher job satisfaction.

- Banking Behavior: JPMorgan’s internal GenAI tools increased advisory productivity over threefold and reduced servicing costs by nearly 30%.
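Since NPS appears both here and in the use-case tables, a minimal sketch of the standard calculation may help: promoters answer 9 or 10 on a 0-10 "likelihood to recommend" scale, detractors answer 0 to 6, and NPS is the percentage of promoters minus the percentage of detractors. The before/after survey responses in the example are hypothetical.

```python
from typing import Iterable


def net_promoter_score(scores: Iterable[int]) -> float:
    """NPS on a -100..100 scale from 0-10 survey answers.

    Passives (7-8) count only toward the total number of responses.
    """
    responses = list(scores)
    if not responses:
        raise ValueError("no survey responses")
    if any(not 0 <= s <= 10 for s in responses):
        raise ValueError("scores must be between 0 and 10")
    promoters = sum(1 for s in responses if s >= 9)
    detractors = sum(1 for s in responses if s <= 6)
    return 100.0 * (promoters - detractors) / len(responses)


if __name__ == "__main__":
    before = [10, 9, 7, 6, 3]  # hypothetical responses before the GenAI rollout
    after = [10, 9, 9, 8, 6]   # hypothetical responses after the rollout
    print(net_promoter_score(after) - net_promoter_score(before))
```

Tracking the change in NPS against a pre-rollout baseline, rather than the absolute score, isolates the effect of the GenAI interaction more clearly.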

(f) Governance, compliance & risk

- What to Measure: Frequency of hallucinations; bias evaluations; policy or regulatory breaches.

- Why It Matters: Foundation models bring risks ranging from hallucinations to fairness concerns. Rigorous KPIs help ensure GenAI remains trusted.

(g) Strategic & ESG-aligned metrics

- What to measure: Carbon footprint per model inference; sustainability contributor scores; ethical incident reductions.

- Global sustainability insights:

Efficiency of AI operations is emerging as a governance and ESG metric, but detailed financial-sector data is still developing.
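A carbon-per-inference metric can be estimated as energy per call, scaled by the data centre's Power Usage Effectiveness (PUE, total facility energy divided by IT equipment energy), times the carbon intensity of the electricity supply. The sketch below assumes placeholder inputs (3 Wh per call, PUE of 1.2, 400 gCO2e/kWh); these are not measured values, and real figures would come from the bank's infrastructure provider or metering.

```python
def grams_co2e_per_call(energy_wh: float, pue: float, grid_g_per_kwh: float) -> float:
    """Estimated emissions for one model call, in grams of CO2-equivalent.

    energy_wh: IT energy per inference in watt-hours (measured or estimated)
    pue: Power Usage Effectiveness of the hosting facility (>= 1.0)
    grid_g_per_kwh: carbon intensity of the electricity supply in gCO2e/kWh
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return energy_wh / 1000.0 * pue * grid_g_per_kwh


def monthly_kg_co2e(calls_per_month: int, g_per_call: float) -> float:
    """Aggregate monthly emissions in kilograms."""
    return calls_per_month * g_per_call / 1000.0


if __name__ == "__main__":
    g = grams_co2e_per_call(energy_wh=3.0, pue=1.2, grid_g_per_kwh=400.0)
    print(f"{g:.2f} gCO2e per call, {monthly_kg_co2e(1_000_000, g):.0f} kg per month")
```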

Integrated KPI dashboard for GenAI in banking

| KPI category | Example metrics | Why it matters |
|---|---|---|
| Model quality | Precision, F1-score, LLM preference scoring | Baseline technical integrity |
| Adoption & utilization | % of users, production use cases, departmental rollout | Ensures model usage translates into impact |
| Productivity | Time saved, tasks/hour, efficiency gains | Reflects operational improvement |
| Business impact | Revenue lift, cost reduction, ROI, innovation velocity | Tracks tangible strategic value |
| Satisfaction | NPS, employee satisfaction, cognitive load reports | Measures user trust and tool usability |
| Governance & risk | Hallucination rate, bias audit counts, compliance infractions | Ensures safe, ethical, and compliant operations |
| Strategic / ESG | Carbon cost per call, sustainable deployment ratios | Aligns AI with strategic and environmental goals |

4. Banking AI use cases & KPI mapping

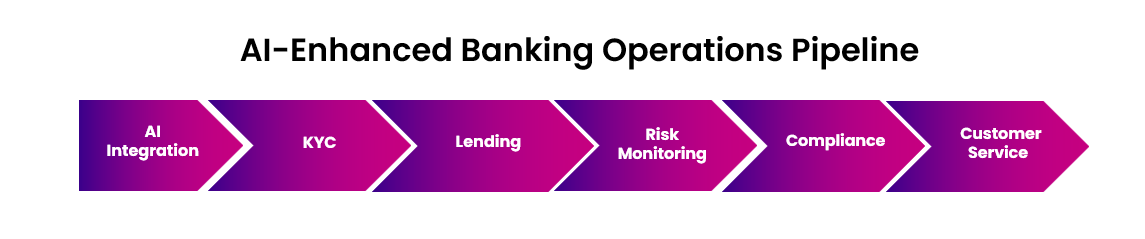

Banks around the world are increasingly embedding AI into their core operations (risk, fraud, underwriting, customer service) to drive faster decisions, reduce losses, and deliver better experiences. For example, Mastercard’s Decision Intelligence platform uses AI-powered decisioning to catch fraud and anomalous behavior in real time, substantially reducing false positives while improving detection rates.

Similarly, fintechs and banks are automating KYC and document verification, optimizing underwriting models with alternative data, and deploying virtual assistants to handle frequent customer queries outside normal business hours. These are more than technical experiments: the real value emerges when we track how these use cases affect business metrics such as fraud losses prevented, underwriting default rates, processing times, customer satisfaction, and revenue uplift.

Yet too often, banks still focus primarily on traditional model metrics (accuracy, precision, recall) and treat deployment and adoption as afterthoughts. This narrow view misses what drives impact. Mapping use cases to KPIs brings clarity: it helps board members see what really moves the needle, enables teams to measure meaningfully, and lets institutions move from pilot projects to enterprise-scale AI.

A. Predictive analytics use cases

| Use case | Real-world example | Outcome / Benefit | Key KPIs to track |
|---|---|---|---|
| Fraud detection | Mastercard’s Decision Intelligence | Detects fraud in real time with lower false positives [19] | Detection rate, false-positive rate, fraud value prevented, response latency |
| Credit scoring / lending | Upstart lending platform | Approves 27% more borrowers at 15% lower interest rates | Approval rate increase, default rate, processing time reduction |
| Risk monitoring | IBM Watson for risk | Predicts operational risks across internal banking processes | Risk event reduction, anomaly detection accuracy, ops savings |
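The fraud-detection KPIs in the first row can be computed from scored transactions once investigation outcomes are known. The sketch below is a minimal illustration: the `ScoredTxn` record is a hypothetical structure, it assumes that flagged fraud is stopped (so its full value counts as prevented), and it uses the nearest-rank method for the 95th-percentile latency.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScoredTxn:
    is_fraud: bool     # ground truth, confirmed after investigation
    flagged: bool      # model decision (alert raised / transaction blocked)
    amount: float      # transaction value
    latency_ms: float  # time from request to decision


def fraud_kpis(txns: list[ScoredTxn]) -> dict[str, float]:
    if not txns:
        raise ValueError("no transactions supplied")
    fraud = [t for t in txns if t.is_fraud]
    legit = [t for t in txns if not t.is_fraud]
    caught = [t for t in fraud if t.flagged]
    false_alarms = [t for t in legit if t.flagged]
    latencies = sorted(t.latency_ms for t in txns)
    # Nearest-rank 95th percentile: 1-based rank ceil(0.95 * n), in integer arithmetic.
    rank = (95 * len(latencies) + 99) // 100
    return {
        "detection_rate": len(caught) / len(fraud) if fraud else 0.0,
        "false_positive_rate": len(false_alarms) / len(legit) if legit else 0.0,
        # Assumes flagged fraud is stopped, so its full value counts as prevented.
        "fraud_value_prevented": sum(t.amount for t in caught),
        "p95_latency_ms": latencies[rank - 1],
    }


if __name__ == "__main__":
    sample = [
        ScoredTxn(True, True, 500.0, 40.0),
        ScoredTxn(True, False, 200.0, 35.0),
        ScoredTxn(False, True, 80.0, 50.0),
        ScoredTxn(False, False, 60.0, 30.0),
        ScoredTxn(False, False, 20.0, 25.0),
    ]
    print(fraud_kpis(sample))
```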

B. Generative AI use cases

| Use case | Real-world example | Outcome / Benefit | Key KPIs to track |
|---|---|---|---|
| Conversational banking | Bank of America’s "Erica" assistant | Handles 18+ million interactions, available 24/7 [20] | Usage volume, NPS change, average handling time |
| Automated compliance reporting | NICE Actimize AML/KYC GenAI platform | Automates dynamic risk reports; enhances compliance | Time saved, compliance error rate, throughput |
| Contract & document drafting | JPMorgan’s COiN (Contract Intelligence) | Summarizes legal docs in seconds | Doc review time saved, use frequency, error rate |

C. Agentic AI use cases

These emerging "agentic" systems act autonomously across banking functions, offering proactive, context-aware value:

| Area | Example capability | Benefit | Key KPIs to track |
|---|---|---|---|
| Personalized offers | Dynamic financial advice & optimized offers | Tailored product recommendation at scale | Cross-sell lift, personalization ROI |
| Risk & underwriting | Real-time risk profiling and default prediction | Smarter, adaptive credit risk decisions | Default rate reduction, detection speed |
| Fraud prevention | Contextual anomaly detection across workflows | Real-time prevention with nuanced context-aware analysis | Fraud metrics, detection latency |
| KYC / onboarding | Adaptive identity verification | Faster and safer customer onboarding | Onboarding time, error rate |
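A KPI like cross-sell lift is most credible when measured against a holdout group that did not receive the agent's personalized offers. As a minimal sketch, assuming randomized treated and control groups, the code below computes relative lift in conversion rate and a two-proportion z-test with a pooled rate; the counts in the example are hypothetical.

```python
import math


def conversion_lift(
    treated_conv: int, treated_n: int, control_conv: int, control_n: int
) -> tuple[float, float]:
    """Relative lift of the treated group over a holdout control, plus a z-score.

    |z| above about 1.96 corresponds to significance at the 5% level (two-sided).
    """
    p_t = treated_conv / treated_n
    p_c = control_conv / control_n
    if p_c == 0:
        raise ValueError("control conversion rate is zero; relative lift undefined")
    lift = (p_t - p_c) / p_c
    # Pooled conversion rate under the null hypothesis of no difference.
    pooled = (treated_conv + control_conv) / (treated_n + control_n)
    se = math.sqrt(pooled * (1 - pooled) * (1 / treated_n + 1 / control_n))
    z = (p_t - p_c) / se if se > 0 else 0.0
    return lift, z


if __name__ == "__main__":
    lift, z = conversion_lift(360, 10_000, 300, 10_000)
    print(f"lift={lift:.1%}, z={z:.2f}")
```

Reporting the z-score alongside the lift helps separate genuine personalization gains from noise, especially in small pilots.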

5. Implementation: Building a KPI framework for banking GenAI

Crafting an effective KPI framework for GenAI in banking demands a structured approach, one that connects strategic goals, operational benchmarks, and human adoption through measurable indicators. Research repeatedly suggests that AI’s greatest failure point is often not the models themselves but the absence of clear, aligned metrics that link AI to real enterprise value. Here are the tailored steps banks should follow, informed by global insights and real-world banking practices:

Step 1: Align KPIs with business objectives

- Define Strategic Success: Begin by aligning KPIs with what matters most to the institution, whether cost reduction, revenue growth, customer satisfaction, or compliance.

- Collaborative Design: Involving stakeholders across functions ensures KPIs reflect operational realities, not just technical ambition. For instance, some banks prioritize compliance frameworks, while others emphasize customer engagement or product innovation.

Step 2: Establish baselines & measurement mechanisms

- Benchmark Before AI: Document baseline performance metrics (loan processing times, fraud review durations, call center handle times) to measure improvement after AI adoption.

- Use Real-Time Tracking: Implement dashboards, logs, and user surveys to monitor impact continuously. Continuous tracking enables trend detection and proactive course correction.
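Comparing post-rollout metrics with their pre-AI baselines can be sketched as below. The `BaselinedKpi` class and the example values are hypothetical; the key design choice is recording whether lower or higher is better, so that every KPI reports improvement with the same sign convention (positive means better).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BaselinedKpi:
    name: str
    baseline: float  # measured before the GenAI rollout
    current: float   # same metric, same definition, after the rollout
    lower_is_better: bool = True

    def improvement(self) -> float:
        """Relative improvement over baseline; positive means better."""
        if self.baseline == 0:
            raise ValueError(f"{self.name}: zero baseline, relative change undefined")
        change = (self.current - self.baseline) / self.baseline
        return -change if self.lower_is_better else change


if __name__ == "__main__":
    kpis = [
        BaselinedKpi("loan processing time (h)", baseline=72, current=54),
        BaselinedKpi("fraud review duration (min)", baseline=45, current=36),
        BaselinedKpi("call center handle time (s)", baseline=420, current=399),
    ]
    for k in kpis:
        print(f"{k.name}: {k.improvement():+.1%}")
```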

Step 3: Create a balanced scorecard

- Balanced Approach: Adapt Kaplan & Norton’s Balanced Scorecard framework to integrate five perspectives:

  - Technical: Model accuracy, hallucination rates

  - Operational: Adoption rates, productivity improvements

  - Strategic: Business impact, innovation velocity

  - Customer & Employee: Satisfaction and empowerment

  - Governance: Risk incidents, compliance markers

- Banking Use Case: Published studies report that Balanced Scorecards mediate the relationship between digital adoption and improved bank performance, making them a compelling tool for strategy alignment.
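One way to roll the five perspectives into a single scorecard index is to convert each KPI into target attainment (capped between 0 and 1) and take a weighted average. This is a minimal sketch under stated assumptions: the perspective keys, targets, and equal weights are hypothetical, and many banks would keep the perspectives separate on a dashboard rather than collapse them into one number.

```python
PERSPECTIVES = ("technical", "operational", "strategic", "customer_employee", "governance")


def attainment(actual: float, target: float, higher_is_better: bool = True) -> float:
    """Progress toward a target, capped to the range [0, 1]."""
    if higher_is_better:
        if target <= 0:
            raise ValueError("target must be positive")
        ratio = actual / target
    else:
        # For "lower is better" KPIs (e.g. hallucination rate), meeting the target scores 1.
        ratio = target / actual if actual > 0 else 1.0
    return max(0.0, min(ratio, 1.0))


def scorecard_index(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of perspective scores, each already in [0, 1]."""
    if set(scores) != set(PERSPECTIVES) or set(weights) != set(PERSPECTIVES):
        raise ValueError("scores and weights must cover every perspective exactly once")
    total_weight = sum(weights.values())
    return sum(scores[p] * weights[p] for p in PERSPECTIVES) / total_weight


if __name__ == "__main__":
    scores = {
        "technical": attainment(0.81, 0.90),           # F1 vs. target
        "operational": attainment(0.60, 0.75),         # adoption rate vs. target
        "strategic": 0.5,                              # e.g. half of savings target reached
        "customer_employee": 1.0,
        "governance": attainment(0.04, 0.02, False),   # hallucination rate vs. ceiling
    }
    weights = {p: 1.0 for p in PERSPECTIVES}  # equal weights (hypothetical)
    print(f"Scorecard index: {scorecard_index(scores, weights):.2f}")
```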

Step 4: Anticipate the “J-Curve” of productivity

Expect an initial dip in productivity: short-term friction is common as teams adapt to AI. However, substantial gains typically follow as workflows stabilize.
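To track the J-curve in practice, a team could record a weekly productivity index relative to baseline (1.0 = baseline) and watch for two milestones: the week productivity returns to baseline, and the later week when cumulative gains have paid back the early losses. The sketch below assumes such an index exists; the function name and the example series are hypothetical.

```python
from itertools import accumulate
from typing import Optional, Sequence


def j_curve_milestones(
    weekly_index: Sequence[float], baseline: float = 1.0
) -> tuple[Optional[int], Optional[int]]:
    """Return (recovery_week, break_even_week), 1-based, or None if not yet reached.

    recovery_week: first week, from the start of the dip, at or above baseline.
    break_even_week: first week when cumulative gain over baseline, counted
    from the start of the dip, is back to zero or above.
    """
    deltas = [x - baseline for x in weekly_index]
    dip_start = next((i for i, d in enumerate(deltas) if d < 0), None)
    if dip_start is None:
        return None, None  # no dip observed, so no J-curve to measure
    recovery = next((i + 1 for i in range(dip_start, len(deltas)) if deltas[i] >= 0), None)
    cumulative = accumulate(deltas[dip_start:])
    break_even = next((dip_start + i + 1 for i, c in enumerate(cumulative) if c >= 0), None)
    return recovery, break_even


if __name__ == "__main__":
    index = [0.90, 0.85, 0.95, 1.05, 1.10, 1.20]  # hypothetical weekly productivity
    print(j_curve_milestones(index))
```

Reporting both milestones makes the adaptation cost explicit: recovery shows when teams are back to normal, while break-even shows when the rollout has earned back the dip.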

Step 5: Review, iterate, and scale

- Dynamic Metrics: As models improve and business context evolves, KPIs must adapt too. Quarterly reviews help refine goals and recalibrate focus.

- Scale with Governance: A sustainable operating model combines central GenAI oversight with aligned change management across departments.

Sample KPI implementation roadmap for banks

| Phase | Key activities |
|---|---|
| Foundation | Define business priorities; co-design KPIs with stakeholders |
| Baseline Setting | Capture key metrics before AI: processing time, NPS scores, error rates |
| Scorecard launch | Build a BSC aligned to technical, operational, strategic, customer, and compliance metrics |
| Pilot phase | Implement AI tools in controlled environments; monitor adoption and productivity trends |
| Stabilization | Expect short-term productivity dips; collect feedback and insights during this adaptation phase |
| Scale & Governance | Institutionalize reporting, hold quarterly KPI reviews, update metrics, expand adoption responsibly |

6. Evidence & real-world insights: Banking GenAI in action

While Generative AI holds vast promise for banking, real-world studies reveal a significant gap between expectations and measurable outcomes. Below, we explore key findings from research and industry, contextualized for financial services.

6.1. Task-level productivity gains

In a study involving over 5,000 customer support agents, AI-powered copilots increased the number of issues resolved per hour by approximately 14%, with the most notable improvements among less experienced team members [23] [24].

Experiments with AI pair-programming tools showed that developers completed coding tasks 55.8% faster, with no loss in quality.

While not banking-specific, enterprise cases have shown up to 30% improvement in productivity for IT admin tasks using GenAI copilots. Similar workflow gains may carry over to back-office banking functions such as fraud review, KYC processing, and compliance monitoring [25].

6.2. Enterprise-Level ROI challenges

A striking 95% of enterprises report no financial return from GenAI pilot programs, according to MIT's “GenAI Divide” study. Only about 5% reached production with measurable ROI [26] [27] [28].

The same research highlights steep pilot-to-production dropout: only one in five custom tools reached the pilot stage, and far fewer made an operational impact [29] [30].

6.3 The “J-Curve” of GenAI adoption

Productivity gains often follow an initial dip caused by learning curves and resistance to workflow change. This pattern aligns with the productivity J-curve effect seen when new GenAI tools are introduced [31].

6.4 Omdia market study highlights

In surveyed organizations deploying enterprise GenAI:

- 17% realized value through productivity gains

- 22% reported improvements in customer engagement

- Only 9% achieved measurable financial ROI at scale

6.5 Strategic lessons for banking

- Adoption Trumps Accuracy: Tools that achieve high usage in banking (such as chatbot interfaces for customer service or document automation in lending) tend to yield more ROI than technically optimal tools with poor uptake.

- Balanced KPIs Are Essential: Metrics must span adoption, efficiency, satisfaction, financial impact, and compliance, not focus solely on cost savings or time reduction.

- Treat AI as a Workforce Multiplier: Successful banks position GenAI as a tool that enhances human work rather than replacing staff. Empathetic, augmentation-first deployment wins trust and scales value.

Summary table: Banking GenAI insight snapshot

| Insight category | Key finding |
|---|---|
| Task-level productivity | Agent productivity ↑ 14%; coding tasks completed 55.8% faster |
| ROI realization | 95% of enterprise GenAI pilots fail to translate to financial return |
| Adoption curve | Early dips in performance can precede strong gains with sustained usage |
| Value capture | Productivity and engagement lead; only a minority see direct financial ROI |
| Strategic musts | Adoption, balanced metrics, and human-centered deployment are vital |
